ARTIFICIAL INTELLIGENCE SOCIAL MEDIA RECOGNITION FAILURE

Undesirable events have followed one another back to back since the coronavirus outbreak in 2019. The AI recognition mechanisms that social media platforms rely on to counter fake content uploads failed then, and they are failing now.

LATEST AI SOCIAL MEDIA RECOGNITION FAILURE

Uploads of Afghanistan-Taliban content are increasing on social media platforms, including visuals of local Afghans falling in mid-air from US aircraft.

Though many of the videos are genuine, potentially risky content also circulates online in abundance.

Social Media Artificial Intelligence fails to curb misleading content uploads on the internet.

AI SOCIAL MEDIA THREAT DETECTION SYSTEM

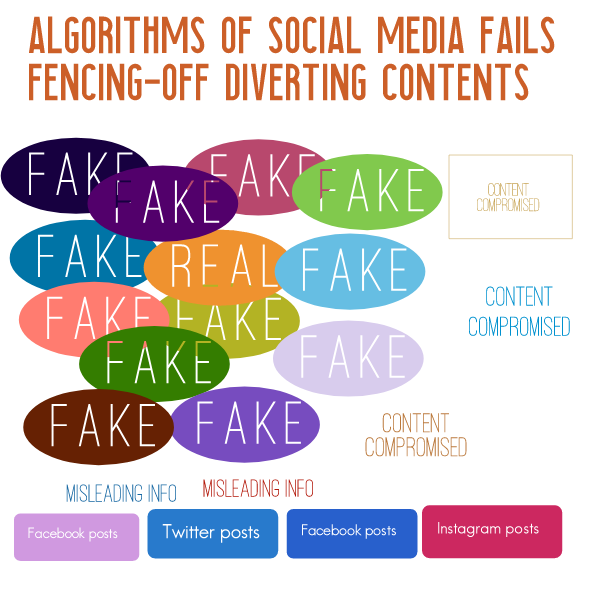

It becomes evident that the algorithms behind social media artificial intelligence fail to filter out potentially harmful content on the platforms.

If you are expecting platform moderators to set up an AI mechanism that filters content uploads promptly, that is perhaps a difficult task to achieve right now.

TO-DO CHECK

- No Re-Upload

After content is removed, a technical safeguard that prevents the same material from being re-posted may work well.

Much like a re-tweet on Twitter, reposting is an easy task, whether it is done from a newly created account or from another existing account.

Everyone likes a free lunch, and the same motivation is behind sharing freely available posts.
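As a rough illustration of the re-post safeguard described above, the sketch below keeps fingerprints of removed content and checks new uploads against them. The class name `RemovedContentRegistry` and its methods are hypothetical, and the use of SHA-256 is an assumption, not something any platform is confirmed to do. Exact hashing only catches byte-identical copies, so an edited copy slips through, which is the weakness discussed later in this article.

```python
import hashlib


class RemovedContentRegistry:
    """Hypothetical registry of fingerprints for content that moderators removed."""

    def __init__(self):
        self._blocked = set()

    @staticmethod
    def fingerprint(data: bytes) -> str:
        # Assumption: a SHA-256 digest of the raw bytes serves as the fingerprint.
        return hashlib.sha256(data).hexdigest()

    def mark_removed(self, data: bytes) -> None:
        # Remember the fingerprint so later uploads can be compared against it.
        self._blocked.add(self.fingerprint(data))

    def is_reupload(self, data: bytes) -> bool:
        # True only when the upload is byte-for-byte identical to removed content.
        return self.fingerprint(data) in self._blocked


registry = RemovedContentRegistry()
removed_video = b"raw bytes of a removed video"
registry.mark_removed(removed_video)

same_copy = registry.is_reupload(removed_video)              # identical re-upload
edited_copy = registry.is_reupload(removed_video + b"\x00")  # slightly edited copy
```

Because the fingerprint is tied to the content and not to the account, creating a new account does not help the uploader. An edited copy is not caught, so a real system would likely need a similarity-based fingerprint in place of an exact one.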

- Unanimous Content-Flagging

Before fake content is removed, the post passes before many eyes, which leads to more reposts and shares.

Removal of misleading content from the web happens late, and it does not prevent further reposting.

When is unauthenticated content identified?

Potentially fake content remains online until:

- AI moderation, such as object and scene detection, recognizes the false post and marks it; or

- The content comes to the moderator's attention after multiple users flag it.

Only then does the platform moderator initiate removal of the content.

The damage stops once AI-powered moderation detects the content at volume. But by then it is too late to mend, because the content has already multiplied in views and shares.
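The two triggers listed above can be sketched as a simple check. This is a minimal illustration only: the `Post` type, the `needs_moderator_review` function, and the threshold of three flags are all assumptions, since the article does not say how many user flags a platform requires.

```python
from dataclasses import dataclass, field

# Hypothetical threshold; the number of flags a real platform needs is unknown.
FLAG_THRESHOLD = 3


@dataclass
class Post:
    post_id: str
    ai_marked: bool = False                        # set when AI moderation marks the post
    flagged_by: set = field(default_factory=set)   # ids of distinct users who flagged it


def needs_moderator_review(post: Post, threshold: int = FLAG_THRESHOLD) -> bool:
    # Escalate when AI moderation marked the post OR enough distinct users flagged it.
    return post.ai_marked or len(post.flagged_by) >= threshold


post = Post("video-1")
post.flagged_by.update({"user-a", "user-b"})
before = needs_moderator_review(post)   # two flags, below the assumed threshold

post.flagged_by.add("user-c")
after = needs_moderator_review(post)    # third distinct flag reaches the threshold
```

Until one of the two conditions is met, the post stays online and keeps collecting views, which is why the delay matters more than the removal itself.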

Detect Root-Fake Content

Root-fake content poses high-magnitude threats and challenges on social media platforms, such as:

- National Security threat

- Communal clashes

- Reputation Damage

- Confusion & Havoc

- Financial Loss

- Loss of Lives

SOLUTION – AI SOCIAL MEDIA RECOGNITION

Since machine technology and AI are man-made utilities, the solutions must also come from their makers.

- Algorithm Mastering – Gradually, not quickly, the algorithms may be trained to recognize the markers that indicate content alteration.

- Video Authenticator – Microsoft is developing a system built on the face-forensics concept, known as the Video Authenticator. This artificial intelligence module may have the potential to detect harmful and misleading content online.

OUTSMARTING TECH

Those who circulate deep-fake content on social media are becoming adept at outsmarting the AI recognition systems that identify misleading content.

- Researchers at the University of California found that the current AI recognition technology used to identify fake posts is easily defeated.

- The other shortcoming, which is beyond control, is the content uploading itself.

- High upload speeds make it easy to edit and re-upload content, which then easily evades algorithmic blocking.

CONSEQUENCES OF MISLEADING CONTENT

Exploiting Reputation

A report from the NYU Stern Center for Business and Human Rights highlights the various methods by which such content is deployed in democratic processes.

The prime threat is deep-fake videos released during elections to harm a political candidate's reputation.

The report mentions China and Iran joining Russia as sources of chaos-creating disinformation.

Paid Misleading Information

A UK-based PR company offered cash to YouTubers and bloggers from France and Germany to spread false propaganda about a high fatality rate caused by the vaccine from the pharmaceutical companies Pfizer and BioNTech.

The incident was found to have taken place in May, and the UK-based PR entity had a Russian connection.

CO-ORDINATION – AI SOCIAL MEDIA RECOGNITION

The responsibility for validating the credibility of content needs to be shared. YouTube, Twitter, Facebook, and other social networking sites with large user bases should have an internal protocol for filtering the content their users upload.

Artificial intelligence in social media needs effective training in content recognition to identify misleading uploads.

CONCLUSION

We may expect effective reform of AI technology in the coming years. But the current shortcomings do not mean that social media moderators have lost control entirely; they still have a commendable grip on this concern.